➺ ffmprovisr ❥

Making FFmpeg Easier

FFmpeg is a powerful tool for manipulating audiovisual files. Unfortunately, it also has a steep learning curve, especially for users unfamiliar with a command line interface. This app helps users through the command generation process so that more people can reap the benefits of FFmpeg.

Each button displays helpful information about how to perform a wide variety of tasks using FFmpeg. To use this site, click on the task you would like to perform. A new window will open up with a sample command and a description of how that command works. You can copy the command, and a breakdown of each flag explains what it does.

Tutorials

For FFmpeg basics, check out the program’s official website.

For instructions on how to install FFmpeg on Mac, Linux, and Windows, refer to Reto Kromer’s installation instructions.

For Bash and command line basics, try the Command Line Crash Course. For a little more context presented in an ffmprovisr style, try explainshell.com!

License

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

Sister projects

Script Ahoy: Community Resource for Archivists and Librarians Scripting

The Sourcecaster: an app that helps you use the command line to work through common challenges that come up when working with digital primary sources.

Cable Bible: A Guide to Cables and Connectors Used for Audiovisual Tech

What do you want to do?

Select from the following.

Change formats

WAV to MP3

ffmpeg -i input_file.wav -write_id3v1 1 -id3v2_version 3 -dither_method modified_e_weighted -out_sample_rate 48k -qscale:a 1 output_file.mp3

This will convert your WAV files to MP3s.

- ffmpeg

- starts the command

- -i input_file

- path and name of the input file

- -write_id3v1 1

- Write ID3v1 tag. This will add metadata to the old MP3 format, assuming you’ve embedded metadata into the WAV file.

- -id3v2_version 3

- Write ID3v2 tag. This will add metadata to a newer MP3 format, assuming you’ve embedded metadata into the WAV file.

- -dither_method modified_e_weighted

- Dither makes sure you don’t unnecessarily truncate the dynamic range of your audio.

- -out_sample_rate 48k

- Sets the audio sampling frequency to 48 kHz. This can be omitted to use the same sampling frequency as the input.

- -qscale:a 1

- This sets the encoder to use a constant quality with a variable bitrate of between 190-250kbit/s. If you would prefer to use a constant bitrate, this could be replaced with -b:a 320k to set to the maximum bitrate allowed by the MP3 format. For more detailed discussion on variable vs constant bitrates see here.

- output_file

- path and name of the output file
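The choice between variable and constant bitrate described above can be sketched as a small Python helper that assembles the argument list. This is only an illustration: the function name wav_to_mp3_args and its constant_bitrate parameter are invented here, and only the ffmpeg flags come from the command above.

```python
def wav_to_mp3_args(input_file, output_file, constant_bitrate=False):
    """Build an ffmpeg argument list for WAV to MP3 (hypothetical helper)."""
    args = [
        "ffmpeg", "-i", input_file,
        "-write_id3v1", "1",
        "-id3v2_version", "3",
        "-dither_method", "modified_e_weighted",
        # -out_sample_rate is left out here, so the input sampling rate is kept
    ]
    if constant_bitrate:
        # Constant bitrate at 320 kbit/s, the MP3 maximum mentioned above
        args += ["-b:a", "320k"]
    else:
        # Constant quality with a variable bitrate
        args += ["-qscale:a", "1"]
    return args + [output_file]


print(" ".join(wav_to_mp3_args("input_file.wav", "output_file.mp3")))
```

A list like this can be handed to subprocess.run as-is, which avoids any shell quoting of file names.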

WAV to AAC/MP4

ffmpeg -i input_file.wav -c:a aac -b:a 128k -dither_method modified_e_weighted -ar 44100 output_file.mp4

This will convert your WAV file to AAC/MP4.

- ffmpeg

- starts the command

- -i input_file

- path and name of the input file

- -c:a aac

- sets the audio codec to AAC

- -b:a 128k

- sets the bitrate of the audio to 128k

- -dither_method modified_e_weighted

- Dither makes sure you don’t unnecessarily truncate the dynamic range of your audio.

- -ar 44100

- sets the audio sampling frequency to 44100 Hz, or 44.1 kHz, or “CD quality”

- output_file

- path and name of the output file

Transcode into a deinterlaced Apple ProRes LT

ffmpeg -i input_file -c:v prores -profile:v 1 -vf yadif -c:a pcm_s16le output_file.mov

This command transcodes an input file into a deinterlaced Apple ProRes 422 LT file with 16-bit linear PCM encoded audio. The file is deinterlaced using the yadif filter (Yet Another De-Interlacing Filter).

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -c:v prores

- Tells ffmpeg to transcode the video stream into Apple ProRes 422

- -profile:v 1

- Declares profile of ProRes you want to use. The profiles are explained below:

- 0 = ProRes 422 (Proxy)

- 1 = ProRes 422 (LT)

- 2 = ProRes 422 (Standard)

- 3 = ProRes 422 (HQ)

- -vf yadif

- Runs a deinterlacing video filter (yet another deinterlacing filter) on the new file

- -c:a pcm_s16le

- Tells ffmpeg to encode the audio stream in 16-bit linear PCM

- output_file

- path, name and extension of the output file

The extension for the QuickTime container is .mov.

FFmpeg comes with more than one ProRes encoder:

- prores is much faster, can be used for progressive video only, and seems to be better for video according to Rec. 601 (Recommendation ITU-R BT.601).

- prores_ks generates a better file, can also be used for interlaced video, also allows encoding of ProRes 4444 (-c:v prores_ks -profile:v 4), and seems to be better for video according to Rec. 709 (Recommendation ITU-R BT.709).

Transcode to H.264

ffmpeg -i input_file -c:v libx264 -pix_fmt yuv420p -c:a copy output_file

This command takes an input file and transcodes it to H.264 with an .mp4 wrapper, keeping the audio the same codec as the original. The libx264 codec defaults to a “medium” preset for compression quality and a CRF of 23. CRF stands for constant rate factor and determines the quality and file size of the resulting H.264 video. A low CRF means high quality and large file size; a high CRF means the opposite.

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -c:v libx264

- tells ffmpeg to change the video codec of the file to H.264

- -pix_fmt yuv420p

- libx264 will use a chroma subsampling scheme that is the closest match to that of the input. This can result in Y′CbCr 4:2:0, 4:2:2, or 4:4:4 chroma subsampling. QuickTime and most other non-FFmpeg based players can’t decode H.264 files that are not 4:2:0. In order to allow the video to play in all players, you can specify 4:2:0 chroma subsampling.

- -c:a copy

- tells ffmpeg not to change the audio codec

- output_file

- path, name and extension of the output file

In order to use the same basic command to make a higher quality file, you can add some of these presets:

ffmpeg -i input_file -c:v libx264 -pix_fmt yuv420p -preset veryslow -crf 18 -c:a copy output_file

- -preset veryslow

- This option tells ffmpeg to use the slowest preset possible for the best compression quality.

- -crf 18

- Specifying a lower CRF will make a larger file with better visual quality. 18 is often considered a “visually lossless” compression.
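As a rough sketch of how the -preset and -crf options slot into the basic command, the Python helper below builds the argument list and rejects values outside the usual ranges. The helper name h264_args is invented for this example, and the 0 to 51 CRF range and the preset list are assumptions based on common 8-bit libx264 builds.

```python
# Preset names from fastest to slowest (assumed list for libx264)
X264_PRESETS = ["ultrafast", "superfast", "veryfast", "faster", "fast",
                "medium", "slow", "slower", "veryslow"]


def h264_args(input_file, output_file, crf=23, preset="medium"):
    """Build an ffmpeg argument list for an H.264 transcode (hypothetical helper)."""
    if not 0 <= crf <= 51:
        raise ValueError("CRF is assumed to lie between 0 and 51")
    if preset not in X264_PRESETS:
        raise ValueError(f"unknown preset: {preset}")
    return ["ffmpeg", "-i", input_file,
            "-c:v", "libx264", "-pix_fmt", "yuv420p",
            "-preset", preset, "-crf", str(crf),
            "-c:a", "copy", output_file]


print(" ".join(h264_args("input_file", "output_file", crf=18, preset="veryslow")))
```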

H.264 from DCP

ffmpeg -i input_video_file.mxf -i input_audio_file.mxf -c:v libx264 -pix_fmt yuv420p -c:a aac output_file.mp4

This will transcode mxf wrapped video and audio files to an H.264 encoded .mp4 file. Please note this only works for unencrypted, single reel DCPs.

- ffmpeg

- starts the command

- -i input_video_file

- path and name of the video input file. This extension must be .mxf

- -i input_audio_file

- path and name of the audio input file. This extension must be .mxf

- -c:v libx264

- transcodes video to H.264

- -pix_fmt yuv420p

- sets pixel format to yuv420p for greater compatibility with media players

- -c:a aac

- re-encodes using the AAC audio codec

Note that sadly MP4 cannot contain sound encoded by a PCM (Pulse-Code Modulation) audio codec.

- output_file.mp4

- path, name and .mp4 extension of the output file

Variation: Copy PCM audio streams by using Matroska instead of the MP4 container

ffmpeg -i input_video_file.mxf -i input_audio_file.mxf -c:v libx264 -pix_fmt yuv420p -c:a copy output_file.mkv

- -c:a copy

- copies the audio streams as they are, without re-encoding

- output_file.mkv

- path, name and .mkv extension of the output file

Upscaled, Pillar-boxed HD H.264 Access Files from SD NTSC source

ffmpeg -i input_file -c:v libx264 -filter:v "yadif,scale=1440:1080:flags=lanczos,pad=1920:1080:(ow-iw)/2:(oh-ih)/2,format=yuv420p" output_file

- ffmpeg

- Calls the program ffmpeg

- -i

- for input video file and audio file

- -c:v libx264

- encodes video stream with libx264 (h264)

- -filter:v

- calls an option to apply filtering to the video stream. yadif deinterlaces. scale resizes the video frame to 1440x1080, and pad then fills the area around the 4:3 picture to complete the 16:9 frame (1920x1080). flags=lanczos uses the Lanczos scaling algorithm which is slower but better than the default bilinear. Finally, format specifies a pixel format of YUV 4:2:0. The very same scaling filter also downscales a bigger image size into HD.

- output_file

- path, name and extension of the output file

Transform 4:3 aspect ratio into 16:9 with pillarbox

Transform a video file with 4:3 aspect ratio into a video file with 16:9 aspect ratio by correct pillarboxing.

ffmpeg -i input_file -filter:v "pad=ih*16/9:ih:(ow-iw)/2:(oh-ih)/2" -c:a copy output_file

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -filter:v "pad=ih*16/9:ih:(ow-iw)/2:(oh-ih)/2"

- video padding

This resolution independent formula is actually padding any aspect ratio into 16:9 by pillarboxing, because the video filter uses relative values for input width (iw), input height (ih), output width (ow) and output height (oh).

- -c:a copy

- copies the audio stream without re-encoding

For silent videos you can replace -c:a copy by -an.

- output_file

- path, name and extension of the output file
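To see what the relative pad expression works out to for a given frame size, the arithmetic can be reproduced in a few lines of Python. This is a sketch only: the function name pillarbox_pad is invented, and integer division is used as an approximation of how ffmpeg evaluates the expression.

```python
def pillarbox_pad(iw, ih):
    """Evaluate pad=ih*16/9:ih:(ow-iw)/2:(oh-ih)/2 for one input size."""
    ow = ih * 16 // 9      # output width: 16:9 at the input height
    oh = ih                # output height is unchanged
    x = (ow - iw) // 2     # bars of equal width on the left and right
    y = (oh - ih) // 2     # no vertical offset
    return ow, oh, x, y


print(pillarbox_pad(1440, 1080))  # (1920, 1080, 240, 0)
```

For a 1440x1080 picture this gives the same numbers as a fixed pad=1920:1080:240:0.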

Transform SD into HD with pillarbox

Transform a SD video file with 4:3 aspect ratio into an HD video file with 16:9 aspect ratio by correct pillarboxing.

ffmpeg -i input_file -filter:v "colormatrix=bt601:bt709, scale=1440:1080:flags=lanczos, pad=1920:1080:240:0" -c:a copy output_file

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -filter:v "colormatrix=bt601:bt709, scale=1440:1080:flags=lanczos, pad=1920:1080:240:0"

- set colour matrix, video scaling and padding

Three filters are applied:

- The luma coefficients are modified from SD video (according to Rec. 601) to HD video (according to Rec. 709) by a colour matrix. Note that today Rec. 709 is often used also for SD and therefore you may cancel this parameter.

- The scaling filter (scale=1440:1080) works for both upscaling and downscaling. We use the Lanczos scaling algorithm (flags=lanczos), which is slower but gives better results than the default bilinear algorithm.

- The padding filter (pad=1920:1080:240:0) completes the transformation from SD to HD.

- -c:a copy

- copies the audio stream without re-encoding

For silent videos you can replace -c:a copy with -an.

- output_file

- path, name and extension of the output file

Transform 16:9 aspect ratio video into 4:3 with letterbox

Transform a video file with 16:9 aspect ratio into a video file with 4:3 aspect ratio by correct letterboxing.

ffmpeg -i input_file -filter:v "pad=iw:iw*3/4:(ow-iw)/2:(oh-ih)/2" -c:a copy output_file

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -filter:v "pad=iw:iw*3/4:(ow-iw)/2:(oh-ih)/2"

- video padding

This resolution independent formula is actually padding any aspect ratio into 4:3 by letterboxing, because the video filter uses relative values for input width (iw), input height (ih), output width (ow) and output height (oh).

- -c:a copy

- copies the audio stream without re-encoding

For silent videos you can replace -c:a copy by -an.

- output_file

- path, name and extension of the output file
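For a concrete frame size, the letterbox expression reduces to fixed numbers. The short Python sketch below prints the equivalent literal filter; the function name letterbox_pad_filter is invented for illustration, and integer division stands in for ffmpeg's own expression evaluation.

```python
def letterbox_pad_filter(iw, ih):
    """Turn pad=iw:iw*3/4:(ow-iw)/2:(oh-ih)/2 into literal numbers."""
    oh = iw * 3 // 4       # output height: 4:3 at the input width
    y = (oh - ih) // 2     # bars of equal height at top and bottom
    return f"pad={iw}:{oh}:0:{y}"


print(letterbox_pad_filter(1920, 1080))  # pad=1920:1440:0:180
```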

Create FFV1 Version 3 video in a Matroska container with framemd5 of input

ffmpeg -i input_file -map 0 -dn -c:v ffv1 -level 3 -g 1 -slicecrc 1 -slices 16 -c:a copy output_file.mkv -f framemd5 -an md5_output_file

This will losslessly transcode your video with the FFV1 Version 3 codec in a Matroska container. In order to verify losslessness, a framemd5 of the source video is also generated. For more information on FFV1 encoding, try the ffmpeg wiki.

- ffmpeg

- starts the command.

- -i input_file

- path, name and extension of the input file.

- -map 0

- Map all streams that are present in the input file. This is important as ffmpeg will map only one stream of each type (video, audio, subtitles) by default to the output video.

- -dn

- ignore data streams (data no). The Matroska container does not allow data tracks.

- -c:v ffv1

- specifies the FFV1 video codec.

- -level 3

- specifies Version 3 of the FFV1 codec.

- -g 1

- specifies intra-frame encoding, or GOP=1.

- -slicecrc 1

- Adds CRC information for each slice. This makes it possible for a decoder to detect errors in the bitstream, rather than blindly decoding a broken slice.

- -slices 16

- Each frame is split into 16 slices. 16 is a good trade-off between filesize and encoding time.

- -c:a copy

- copies all mapped audio streams.

- output_file.mkv

- path and name of the output file. Use the .mkv extension to save your file in a Matroska container. Optionally, choose a different extension if you want a different container, such as .mov or .avi.

- -f framemd5

- Decodes video with the framemd5 muxer in order to generate md5 checksums for every frame of your input file. This allows you to verify losslessness when compared against the framemd5s of the output file.

- -an

- ignores the audio stream when creating framemd5 (audio no)

- md5_output_file

- path, name and extension of the framemd5 file.
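Checking losslessness then comes down to comparing the per-frame checksums of the source with those of the new file. A minimal Python sketch of that comparison follows; it assumes the usual framemd5 layout (header lines starting with #, then comma-separated fields with the hash in the last column), and the function names and sample hashes are made up for illustration.

```python
def frame_hashes(framemd5_text):
    """Return the hash column of a framemd5 report, skipping '#' header lines."""
    hashes = []
    for line in framemd5_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        hashes.append(line.split(",")[-1].strip())
    return hashes


def first_mismatch(source_text, copy_text):
    """Index of the first differing frame, or None if every hash matches."""
    a, b = frame_hashes(source_text), frame_hashes(copy_text)
    for i, (x, y) in enumerate(zip(a, b)):
        if x != y:
            return i
    if len(a) != len(b):
        return min(len(a), len(b))  # one report has extra frames
    return None


# Placeholder reports with invented hashes
source = "#format: frame checksums\n0, 0, 0, 1, 518400, aaa\n0, 1, 1, 1, 518400, bbb\n"
copy = "#format: frame checksums\n0, 0, 0, 1, 518400, aaa\n0, 1, 1, 1, 518400, bbb\n"
print(first_mismatch(source, copy))  # None
```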

Change Display Aspect Ratio without reencoding video

ffmpeg -i input_file -c:v copy -aspect 4:3 output_file

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -c:v copy

- Copy all mapped video streams.

- -aspect 4:3

- Change Display Aspect Ratio to 4:3. Experiment with other aspect ratios such as 16:9. If used together with -c:v copy, it will affect the aspect ratio stored at container level, but not the aspect ratio stored in encoded frames, if it exists.

- output_file

- path, name and extension of the output file

MKV to MP4

ffmpeg -i input_file.mkv -c:v copy -c:a aac output_file.mp4

This will convert your Matroska (MKV) files to MP4 files.

- ffmpeg

- starts the command

- -i input_file

- path and name of the input file

The extension for the Matroska container is .mkv.

- -c:v copy

- copies the video stream without re-encoding

- -c:a aac

- re-encodes using the AAC audio codec

Note that sadly MP4 cannot contain sound encoded by a PCM (Pulse-Code Modulation) audio codec.

For silent videos you can replace -c:a aac by -an.

- output_file

- path and name of the output file

The extension for the MP4 container is .mp4.

Images to GIF

ffmpeg -f image2 -framerate 9 -pattern_type glob -i "input_image_*.jpg" -vf scale=250x250 output_file.gif

This will convert a series of image files into a gif.

- ffmpeg

- starts the command

- -f image2

- forces input or output file format. image2 specifies the image file demuxer.

- -framerate 9

- sets framerate to 9 frames per second

- -pattern_type glob

- tells ffmpeg that the following mapping should "interpret like a glob" (a "global command" function that relies on the * as a wildcard and finds everything that matches)

- -i "input_image_*.jpg"

- maps all files in the directory that start with input_image_, for example input_image_001.jpg, input_image_002.jpg, input_image_003.jpg... etc.

(The quotation marks are necessary for the above “glob” pattern!)

- -vf scale=250x250

- filter the video to scale it to 250x250; -vf is an alias for -filter:v

- output_file.gif

- path and name of the output file
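To preview which files a pattern such as input_image_*.jpg would pick up before running the command, the same kind of wildcard matching can be tried in Python with the standard fnmatch module. This is an approximation for illustration; ffmpeg's own glob handling may differ in details, and the file names and the function name matching_frames are invented.

```python
from fnmatch import fnmatch


def matching_frames(names, pattern="input_image_*.jpg"):
    """Return the names that match the wildcard pattern, in sorted order."""
    return sorted(name for name in names if fnmatch(name, pattern))


names = ["input_image_002.jpg", "notes.txt", "input_image_001.jpg", "other_001.jpg"]
print(matching_frames(names))  # ['input_image_001.jpg', 'input_image_002.jpg']
```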

Convert DVD to H.264

ffmpeg -i concat:input_file1\|input_file2\|input_file3 -c:v libx264 -c:a copy output_file.mp4

This command allows you to create an H.264 file from a DVD source that is not copy-protected.

Before encoding, you’ll need to establish which of the .VOB files on the DVD or .iso contain the content that you wish to encode. Inside the VIDEO_TS directory, you will see a series of files with names like VTS_01_0.VOB, VTS_01_1.VOB, etc. Some of the .VOB files will contain menus, special features, etc, so locate the ones that contain target content by playing them back in VLC.

- ffmpeg

- starts the command

- -i concat:input files

- lists the input VOB files and directs ffmpeg to concatenate them. Each input file should be separated by a backslash and a pipe, like so:

-i concat:VTS_01_1.VOB\|VTS_01_2.VOB\|VTS_01_3.VOB

The backslash is simply an escape character for the pipe (|).

- -c:v libx264

- sets the video codec as H.264

- -c:a copy

- audio remains as-is (no re-encode)

- output_file.mp4

- path and name of the output file
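Because the backslash only exists to protect the pipe from the shell, it disappears when the command is built as an argument list rather than typed at a prompt. The Python sketch below shows this; the helper name concat_input is invented for illustration.

```python
def concat_input(vob_files):
    """Join VOB file names into a concat: input string for ffmpeg."""
    return "concat:" + "|".join(vob_files)


vobs = ["VTS_01_1.VOB", "VTS_01_2.VOB", "VTS_01_3.VOB"]
args = ["ffmpeg", "-i", concat_input(vobs),
        "-c:v", "libx264", "-c:a", "copy", "output_file.mp4"]
print(args[2])  # concat:VTS_01_1.VOB|VTS_01_2.VOB|VTS_01_3.VOB
```

Passing such a list to subprocess.run starts ffmpeg without a shell, so the pipe characters reach it unescaped.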

It’s also possible to adjust the quality of your output by setting the -crf and -preset values:

ffmpeg -i concat:input_file1\|input_file2\|input_file3 -c:v libx264 -crf 18 -preset veryslow -c:a copy output_file.mp4

- -crf 18

- sets the constant rate factor to a visually lossless value. Libx264 defaults to a crf of 23, considered medium quality; a smaller crf value produces a larger and higher quality video.

- -preset veryslow

- A slower preset will result in better compression and therefore a higher-quality file. The default is medium; slower presets are slow, slower, and veryslow.

Bear in mind that by default, ffmpeg will only encode a single video stream and a single audio stream, picking the ‘best’ of the options available. To preserve all video and audio streams, add -map parameters:

ffmpeg -i concat:input_file1\|input_file2 -map 0:v -map 0:a -c:v libx264 -c:a copy output_file.mp4

- -map 0:v

- encodes all video streams

- -map 0:a

- encodes all audio streams

Transcode to H.265/HEVC

ffmpeg -i input_file -c:v libx265 -pix_fmt yuv420p -c:a copy output_file

This command takes an input file and transcodes it to H.265/HEVC in an .mp4 wrapper, keeping the audio codec the same as in the original file.

Note: ffmpeg must be compiled with libx265, the library of the H.265 codec, for this script to work. (Add the flag --with-x265 if using brew install ffmpeg method).

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -c:v libx265

- tells ffmpeg to encode the video as H.265

- -pix_fmt yuv420p

- libx265 will use a chroma subsampling scheme that is the closest match to that of the input. This can result in YUV 4:2:0, 4:2:2, or 4:4:4 chroma subsampling. For widest accessibility, it’s a good idea to specify 4:2:0 chroma subsampling.

- -c:a copy

- tells ffmpeg not to change the audio codec

- output_file

- path, name and extension of the output file

The libx265 encoding library defaults to a ‘medium’ preset for compression quality and a CRF of 28. CRF stands for ‘constant rate factor’ and determines the quality and file size of the resulting H.265 video. The CRF scale ranges from 0 (best quality [lossless]; largest file size) to 51 (worst quality; smallest file size).

A CRF of 28 for H.265 can be considered a medium setting, corresponding to a CRF of 23 in encoding H.264, but should result in about half the file size.

To create a higher quality file, you can add these presets:

ffmpeg -i input_file -c:v libx265 -pix_fmt yuv420p -preset veryslow -crf 18 -c:a copy output_file

- -preset veryslow

- This option tells ffmpeg to use the slowest preset possible for the best compression quality.

- -crf 18

- Specifying a lower CRF will make a larger file with better visual quality. 18 is often considered a ‘visually lossless’ compression.

Deinterlace a video

ffmpeg -i input_file -c:v libx264 -vf "yadif,format=yuv420p" output_file

This command takes an interlaced input file and outputs a deinterlaced H.264 MP4.

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -c:v libx264

- tells ffmpeg to encode the video stream as H.264

- -vf

- video filtering will be used (-vf is an alias of -filter:v)

- "

- start of filtergraph (see below)

- yadif

- deinterlacing filter (‘yet another deinterlacing filter’)

By default, yadif will output one frame for each frame. Outputting one frame for each field (thereby doubling the frame rate) with yadif=1 may produce visually better results.

- ,

- separates filters

- format=yuv420p

- chroma subsampling set to 4:2:0

By default, libx264 will use a chroma subsampling scheme that is the closest match to that of the input. This can result in Y′CbCr 4:2:0, 4:2:2, or 4:4:4 chroma subsampling. QuickTime and most other non-FFmpeg based players can’t decode H.264 files that are not 4:2:0, therefore it’s advisable to specify 4:2:0 chroma subsampling.

- "

- end of filtergraph

- output_file

- path, name and extension of the output file

"yadif,format=yuv420p" is an ffmpeg filtergraph. Here the filtergraph is made up of one filter chain, which is itself made up of the two filters (separated by the comma).

The enclosing quote marks are necessary when you use spaces within the filtergraph, e.g. -vf "yadif, format=yuv420p", and are included above as an example of good practice.

Note: ffmpeg includes several deinterlacers apart from yadif: bwdif, w3fdif, kerndeint, and nnedi.

For more H.264 encoding options, see the latter section of the encode H.264 command.

Example

Before and after deinterlacing:

Transcode video to a different colourspace

This command uses a filter to convert the video to a different colour space.

ffmpeg -i input_file -c:v libx264 -vf colormatrix=src:dst output_file

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -c:v libx264

- tells ffmpeg to encode the video stream as H.264

- -vf colormatrix=src:dst

- the video filter colormatrix will be applied, with the given source and destination colourspaces.

Accepted values include bt601 (Rec.601), smpte170m (Rec.601, 525-line/NTSC version), bt470bg (Rec.601, 625-line/PAL version), bt709 (Rec.709), and bt2020 (Rec.2020).

For example, to convert from Rec.601 to Rec.709, you would use -vf colormatrix=bt601:bt709.

- output_file

- path, name and extension of the output file

Note: Converting between colourspaces with ffmpeg can be done via either the colormatrix or colorspace filters, with colorspace allowing finer control (individual setting of colourspace, transfer characteristics, primaries, range, pixel format, etc). See this entry on the ffmpeg wiki, and the ffmpeg documentation for colormatrix and colorspace.

Convert colourspace and embed colourspace metadata

ffmpeg -i input_file -c:v libx264 -vf colormatrix=src:dst -color_primaries val -color_trc val -colorspace val output_file

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -c:v libx264

- encode video as H.264

- -vf colormatrix=src:dst

- the video filter colormatrix will be applied, with the given source and destination colourspaces.

- -color_primaries val

- tags video with the given colour primaries.

Accepted values include smpte170m (Rec.601, 525-line/NTSC version), bt470bg (Rec.601, 625-line/PAL version), bt709 (Rec.709), and bt2020 (Rec.2020).

- -color_trc val

- tags video with the given transfer characteristics (gamma).

Accepted values include smpte170m (Rec.601, 525-line/NTSC version), gamma28 (Rec.601, 625-line/PAL version; see note 1), bt709 (Rec.709), bt2020_10 (Rec.2020 10-bit), and bt2020_12 (Rec.2020 12-bit).

- -colorspace val

- tags video as being in the given colourspace.

Accepted values include smpte170m (Rec.601, 525-line/NTSC version), bt470bg (Rec.601, 625-line/PAL version), bt709 (Rec.709), bt2020_cl (Rec.2020 constant luminance), and bt2020_ncl (Rec.2020 non-constant luminance).

- output_file

- path, name and extension of the output file

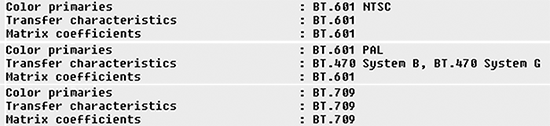

Examples

To Rec.601 (525-line/NTSC):

ffmpeg -i input_file -c:v libx264 -vf colormatrix=bt709:smpte170m -color_primaries smpte170m -color_trc smpte170m -colorspace smpte170m output_file

To Rec.601 (625-line/PAL):

ffmpeg -i input_file -c:v libx264 -vf colormatrix=bt709:bt470bg -color_primaries bt470bg -color_trc gamma28 -colorspace bt470bg output_file

To Rec.709:

ffmpeg -i input_file -c:v libx264 -vf colormatrix=bt601:bt709 -color_primaries bt709 -color_trc bt709 -colorspace bt709 output_file

MediaInfo output examples:

⚠ Using this command it is possible to add Rec.709 tags to a file that is actually Rec.601 (etc), so apply with caution!

These commands are relevant for H.264 and H.265 videos, encoded with libx264 and libx265 respectively.

Note: If you wish to embed colourspace metadata without changing to another colourspace, omit -vf colormatrix=src:dst. However, since it is libx264/libx265 that writes the metadata, it’s not possible to add these tags without reencoding the video stream.

For all possible values for -color_primaries, -color_trc, and -colorspace, see the ffmpeg documentation on codec options.
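The three example commands differ only in their tag values, so the pairing can be written down as a small lookup table. The Python sketch below does this; the dictionary keys (rec601-525 and so on) and the function name colour_tag_args are labels invented for this example, while the tag values are the ones used in the examples above.

```python
# target label -> (color_primaries, color_trc, colorspace)
COLOUR_TAGS = {
    "rec601-525": ("smpte170m", "smpte170m", "smpte170m"),
    "rec601-625": ("bt470bg", "gamma28", "bt470bg"),
    "rec709": ("bt709", "bt709", "bt709"),
}


def colour_tag_args(target):
    """Return the three tagging options for one target standard."""
    primaries, trc, space = COLOUR_TAGS[target]
    return ["-color_primaries", primaries,
            "-color_trc", trc,
            "-colorspace", space]


print(" ".join(colour_tag_args("rec601-625")))
```

Keeping the values in one table makes it harder to mix, say, a PAL transfer characteristic with NTSC primaries by accident.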

1. Out of step with the regular pattern, -color_trc doesn't accept bt470bg; it is instead here referred to directly as gamma.

In the Rec.601 standard, 525-line/NTSC and 625-line/PAL video have assumed gammas of 2.2 and 2.8 respectively.

Inverse telecine a video file

ffmpeg -i input_file -c:v libx264 -vf "fieldmatch,yadif,decimate" output_file

The inverse telecine procedure reverses the 3:2 pull down process, restoring 29.97fps interlaced video to the 24fps frame rate of the original film source.

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -c:v libx264

- encode video as H.264

- -vf "fieldmatch,yadif,decimate"

- applies these three video filters to the input video.

Fieldmatch is a field matching filter for inverse telecine - it reconstructs the progressive frames from a telecined stream.

Yadif (‘yet another deinterlacing filter’) deinterlaces the video. (Note that ffmpeg also includes several other deinterlacers).

Decimate deletes duplicated frames.

- output_file

- path, name and extension of the output file

"fieldmatch,yadif,decimate" is an ffmpeg filtergraph. Here the filtergraph is made up of one filter chain, which is itself made up of the three filters (separated by commas).

The enclosing quote marks are necessary when you use spaces within the filtergraph, e.g. -vf "fieldmatch, yadif, decimate", and are included above as an example of good practice.

Note that if applying an inverse telecine procedure to a 29.97i file, the output framerate will actually be 23.976fps.

This command can also be used to restore other framerates.
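The 23.976fps figure follows from simple arithmetic: 3:2 pulldown spreads four film frames over five video frames, so removing the duplicates leaves four fifths of the NTSC rate. A short Python check of that calculation, with variable names chosen here for illustration:

```python
from fractions import Fraction

ntsc_rate = Fraction(30000, 1001)         # "29.97" fps as an exact fraction
film_rate = ntsc_rate * Fraction(4, 5)    # keep 4 out of every 5 frames

print(film_rate)                    # 24000/1001
print(round(float(film_rate), 3))   # 23.976
```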

Example

Before and after inverse telecine:

Filters

Plays a graphical output showing decibel levels of an input file

ffplay -f lavfi "amovie='input.mp3',astats=metadata=1:reset=1,adrawgraph=lavfi.astats.Overall.Peak_level:max=0:min=-30.0:size=700x256:bg=Black[out]"

- ffplay

- starts the command

- -f lavfi

- tells ffplay to use the Libavfilter input virtual device

- "

- quotation mark to start command

- amovie='input.mp3'

- declares audio source file on which to apply filter

- ,

- comma signifies the end of audio source section and the beginning of the filter section

- astats=metadata=1

- tells the astats filter to output metadata that can be passed to another filter (in this case adrawgraph)

- :

- divides between options of the same filter

- reset=1

- tells the filter to calculate the stats on every frame (increasing this number would calculate stats for groups of frames)

- ,

- comma divides one filter in the chain from another

- adrawgraph=lavfi.astats.Overall.Peak_level:max=0:min=-30.0

- draws a graph using the overall peak volume calculated by the astats filter. It sets the max for the graph to 0 (dB) and the minimum to -30 (dB). For more options on data points that can be graphed see the ffmpeg astats documentation

- size=700x256:bg=Black

- sets the background color and size of the output

- [out]

- ends the filterchain and sets the output

- "

- quotation mark to close command
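To get a feel for the values plotted on the graph, a peak level in decibels can be computed by hand from a handful of samples. The Python sketch below is a conceptual illustration only, not the astats implementation; it assumes floating-point samples normalised to the range -1.0 to 1.0, and the function names are invented.

```python
import math


def peak_level_db(samples):
    """Peak level in dB relative to full scale for normalised samples."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return float("-inf")   # digital silence has no finite level
    return 20 * math.log10(peak)


def in_graph_range(level_db, minimum=-30.0, maximum=0.0):
    """True if the level falls inside the min/max set on the graph above."""
    return minimum <= level_db <= maximum


level = peak_level_db([0.1, -0.5, 0.25])
print(round(level, 2), in_graph_range(level))  # -6.02 True
```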

Example of filter output

Shows all pixels outside of broadcast range

ffplay -f lavfi "movie='input.mp4',signalstats=out=brng:color=cyan[out]"

- ffplay

- starts the command

- -f lavfi

- tells ffplay to use the Libavfilter input virtual device

- "

- quotation mark to start command

- movie='input.mp4'

- declares video file source to apply filter

- ,

- comma separates the video source from the filter that follows

- signalstats=out=brng

- tells ffplay to use the signalstats filter and, with out=brng, to highlight the pixels that fall outside of broadcast range

- :

- indicates there’s another parameter coming

- color=cyan[out]

- sets the color of out-of-range pixels to cyan

- "

- quotation mark to close command
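What counts as "outside of broadcast range" can be illustrated with a per-pixel check. The Python sketch below assumes the commonly cited 8-bit limits (16 to 235 for luma, 16 to 240 for chroma); the exact thresholds the filter applies, particularly at other bit depths, may differ, and the function name is invented.

```python
def out_of_broadcast_range(y, u, v):
    """True if an 8-bit Y, U, V triple leaves the assumed legal range."""
    luma_ok = 16 <= y <= 235
    chroma_ok = 16 <= u <= 240 and 16 <= v <= 240
    return not (luma_ok and chroma_ok)


print(out_of_broadcast_range(250, 128, 128))  # True
print(out_of_broadcast_range(128, 128, 128))  # False
```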

Example of filter output

Plays video with OCR on top

Note: ffmpeg must be compiled with the tesseract library for this script to work (--with-tesseract if using brew install ffmpeg method).

ffplay input_file -vf "ocr,drawtext=fontfile=/Library/Fonts/Andale Mono.ttf:text=%{metadata\\\:lavfi.ocr.text}:fontcolor=white"

- ffplay

- starts the command

- input_file

- path, name and extension of the input file

- -vf

- creates a filtergraph to use for the streams

- "

- quotation mark to start filter command

- ocr,

- tells ffplay to run the ocr filter on the video; the comma separates it from the next filter in the chain

- drawtext=fontfile=/Library/Fonts/Andale Mono.ttf

- tells ffplay to drawtext and use a specific font (Andale Mono) when doing so

- :

- indicates there’s another parameter coming

- text=%{metadata\\\:lavfi.ocr.text}

- tells ffplay what text to draw when playing. In this case, it calls for the metadata stored under the lavfi.ocr.text key

- :

- indicates there’s another parameter coming

- fontcolor=white

- specifies font color as white

- "

- quotation mark to close filter command

Exports OCR data to screen

Note: ffmpeg must be compiled with the tesseract library for this script to work (--with-tesseract if using brew install ffmpeg method)

ffprobe -show_entries frame_tags=lavfi.ocr.text -f lavfi -i "movie=input_file,ocr"

- ffprobe

- starts the command

- -show_entries

- sets a list of entries to show

- frame_tags=lavfi.ocr.text

- shows the lavfi.ocr.text tag in the frame section of the video

- -f lavfi

- tells ffprobe to use the Libavfilter input virtual device

- -i "movie=input_file,ocr"

- declares 'movie' as input_file and passes in the 'ocr' command

Plays vectorscope of video

ffplay input_file -vf "split=2[m][v],[v]vectorscope=b=0.7:m=color3:g=green[v],[m][v]overlay=x=W-w:y=H-h"

- ffplay

- starts the command

- input_file

- path, name and extension of the input file

- -vf

- creates a filtergraph to use for the streams

- "

- quotation mark to start command

- split=2[m][v]

- Splits the input into two identical outputs and names them [m] and [v]

- ,

- comma separates this filter from the next one in the filtergraph

- [v]vectorscope=b=0.7:m=color3:g=green[v]

- asserts usage of the vectorscope filter and sets a light background opacity (b, alias for bgopacity), sets a background color style (m, alias for mode), and graticule color (g, alias for graticule)

- ,

- comma separates this filter from the next one in the filtergraph

- [m][v]overlay=x=W-w:y=H-h

- declares where the vectorscope will overlay on top of the video image as it plays

- "

- quotation mark to end command

This will play two input videos side by side while also applying the temporal difference filter to them

ffmpeg -i input01 -i input02 -filter_complex "[0:v:0]tblend=all_mode=difference128[a];[1:v:0]tblend=all_mode=difference128[b];[a][b]hstack[out]" -map [out] -f nut -c:v rawvideo - | ffplay -

- ffmpeg

- starts the command

- -i input01 -i input02

- Designates the files to use for inputs one and two respectively

- -filter_complex

- Lets ffmpeg know we will be using a complex filter (this must be used for multiple inputs)

- [0:v:0]tblend=all_mode=difference128[a]

- Applies the tblend filter (with the settings all_mode and difference128) to the first video stream from the first input and assigns the result to the output [a]

- [1:v:0]tblend=all_mode=difference128[b]

- Applies the tblend filter (with the settings all_mode and difference128) to the first video stream from the second input and assigns the result to the output [b]

- [a][b]hstack[out]

- Takes the outputs from the previous steps ([a] and [b]) and uses the hstack (horizontal stack) filter on them to create the side by side output. This output is then named [out]

- -map [out]

- Maps the output of the filter chain

- -f nut

- Sets the format for the output video stream to Nut

- -c:v rawvideo

- Sets the video codec of the output video stream to raw video

- -

- Tells ffmpeg that the output will be piped to a new command (as opposed to a file)

- |

- Tells the system you will be piping the output of the previous command into a new command

- ffplay -

- Starts ffplay and tells it to use the pipe from the previous command as its input

Example of filter output

Make derivative variations

Create GIF

Create high quality GIF

ffmpeg -ss HH:MM:SS -i input_file -filter_complex "fps=10,scale=500:-1:flags=lanczos,palettegen" -t 3 palette.png

ffmpeg -ss HH:MM:SS -i input_file -i palette.png -filter_complex "[0:v]fps=10,scale=500:-1:flags=lanczos[v],[v][1:v]paletteuse" -t 3 -loop 6 output_file

The first command will use the palettegen filter to create a custom palette, then the second command will create the GIF with the paletteuse filter. The result is a high quality GIF.

- ffmpeg

- starts the command

- -ss HH:MM:SS

- starting point of the gif. If a plain numerical value is used it will be interpreted as seconds

- -i input_file

- path, name and extension of the input file

- -filter_complex "fps=frame rate,scale=width:height,palettegen"

- a complex filtergraph using the fps filter to set frame rate, the scale filter to resize, and the palettegen filter to generate the palette. The scale value of -1 preserves the aspect ratio

- -t 3

- duration in seconds (here 3; can be specified also with a full timestamp, i.e. here 00:00:03)

- -loop 6

- number of times to loop the gif. A value of -1 will disable looping. Omitting -loop will use the default which will loop infinitely

- output_file

- path, name and extension of the output file

Simpler GIF creation

ffmpeg -ss HH:MM:SS -i input_file -vf "fps=10,scale=500:-1" -t 3 -loop 6 output_file

This is a quick and easy method. Dithering is more apparent than the above method using the palette* filters, but the file size will be smaller. Perfect for that “legacy” GIF look.

One thumbnail

ffmpeg -i input_file -ss 00:00:20 -vframes 1 thumb.png

This command will grab a thumbnail 20 seconds into the video.

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -ss 00:00:20

- seeks video file to 20 seconds into the video

- -vframes 1

- sets the number of frames (in this example, one frame)

- output file

- path, name and extension of the output file

Many thumbnails

ffmpeg -i input_file -vf fps=1/60 out%d.png

This will grab a thumbnail every minute and output sequential png files.

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -vf fps=1/60

- Creates a filtergraph to use for the streams. The rest of the command identifies filtering by frames per second, and sets the frames per second at 1/60 (which is one per minute). Omitting this will output all frames from the video.

- output file

- path, name and extension of the output file. In the example out%d.png, %d is a printf-style numbering pattern (not a regular expression) that adds a number (d is for decimal digits) and increments with each frame (out1.png, out2.png, out3.png…). You may also choose a pattern like out%04d.png, which gives 4 digits with leading zeroes (out0001.png, out0002.png, out0003.png, …).
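To preview the names a numbering pattern will produce before running ffmpeg, the shell's own printf can stand in, since it understands the same %d and %04d syntax. This is only a sketch: frame_name is a hypothetical helper written for this illustration, not part of ffmpeg.

```shell
# frame_name PATTERN NUMBER
# Prints the file name that a printf-style pattern yields for one frame number.
frame_name() {
  # The pattern is deliberately used as the printf format string.
  printf "$1\n" "$2"
}

# Compare the plain and the zero-padded pattern for the first three frames.
for n in 1 2 3; do
  frame_name 'out%d.png' "$n"
  frame_name 'out%04d.png' "$n"
done
```

Zero-padded names sort correctly in file browsers and shell globs, which is the usual reason to prefer out%04d.png for longer sequences.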

Excerpt from beginning

ffmpeg -i input_file -t 5 -c copy output_file

This command captures a certain portion of a video file, starting from the beginning and continuing for the amount of time (in seconds) specified in the script. This can be used to create a preview file, or to remove unwanted content from the end of the file. To be more specific, use timecode, such as 00:00:05.

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -t 5

- Tells ffmpeg to stop copying from the input file after a certain time, and specifies the number of seconds after which to stop copying. In this case, 5 seconds is specified.

- -c copy

- use stream copy mode to re-mux instead of re-encode

- output_file

- path, name and extension of the output file

Trim a video without re-encoding

ffmpeg -i input_file -ss 00:02:00 -to 00:55:00 -c copy output_file

This command allows you to create an excerpt from a video file without re-encoding the image data.

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -ss 00:02:00

- sets in point at 00:02:00

- -to 00:55:00

- sets out point at 00:55:00

- -c copy

- use stream copy mode (no re-encoding)

Note: watch out when using -ss with -c copy if the source is encoded with an interframe codec (e.g., H.264). Since ffmpeg must split on i-frames, it will seek to the nearest i-frame to begin the stream copy.

- output_file

- path, name and extension of the output file

Variation: trim video by setting duration, by using -t instead of -to

ffmpeg -i input_file -ss 00:05:00 -t 10 -c copy output_file

- -ss 00:05:00 -t 10

- Beginning five minutes into the original video, this command will create a 10-second-long excerpt.

Note: In order to keep the original timestamps, without trying to sanitise them, you may add the -copyts option.
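When -ss and -to are both given after the input, as in the commands above, the matching -t value is simply the out point minus the in point. The following sketch does that arithmetic; to_seconds is a hypothetical helper, and it assumes plain HH:MM:SS timestamps without fractional seconds.

```shell
# to_seconds HH:MM:SS
# Converts a timestamp to a whole number of seconds.
to_seconds() {
  printf '%s\n' "$1" | awk -F: '{ print $1 * 3600 + $2 * 60 + $3 }'
}

in_point=00:02:00
out_point=00:55:00

# Duration of the excerpt: out point minus in point.
duration=$(( $(to_seconds "$out_point") - $(to_seconds "$in_point") ))

echo "-ss $in_point -to $out_point  selects the same span as  -ss $in_point -t $duration"
```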

Excerpt to end

ffmpeg -i input_file -ss 5 -c copy output_file

This command copies a video file starting from a specified time, removing the first few seconds from the output. This can be used to create an excerpt, or remove unwanted content from the beginning of a video file.

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -ss 5

- Tells ffmpeg what timecode in the file to look for to start copying, and specifies the number of seconds into the video that ffmpeg should start copying. To be more specific, you can use timecode such as 00:00:05.

- -c copy

- use stream copy mode to re-mux instead of re-encode

- output_file

- path, name and extension of the output file

Excerpt from end

ffmpeg -sseof -5 -i input_file -c copy output_file

This command copies a video file starting from a specified time before the end of the file, removing everything before from the output. This can be used to create an excerpt, or extract content from the end of a video file (e.g. for extracting the closing credits).

- ffmpeg

- starts the command

- -sseof -5

- This parameter must stay before the input file. It tells ffmpeg what timecode in the file to look for to start copying, and specifies the number of seconds from the end of the video that ffmpeg should start copying. The end of the file has index 0 and the minus sign is needed to reference earlier portions. To be more specific, you can use timecode such as -00:00:05. Note that in most file formats it is not possible to seek exactly, so ffmpeg will seek to the closest point before.

- -i input_file

- path, name and extension of the input file

- -c copy

- use stream copy mode to re-mux instead of re-encode

- output_file

- path, name and extension of the output file

Create ISO files for DVD access

Create an ISO file that can be used to burn a DVD. Please note, you will have to install dvdauthor. To install dvdauthor using Homebrew run: brew install dvdauthor

ffmpeg -i input_file -aspect 4:3 -target ntsc-dvd output_file.mpg

This command will take any file and create an MPEG file that dvdauthor can use to create an ISO.

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -aspect 4:3

- declares the aspect ratio of the resulting video file. You can also use 16:9.

- -target ntsc-dvd

- specifies the target file type, here an NTSC DVD. This could also be pal-dvd.

- output_file.mpg

- path and name of the output file. The extension must be .mpg

Cover head switching noise

ffmpeg -i input_file -filter:v drawbox=w=iw:h=7:y=ih-h:t=max output_file

This command will draw a black box over a small area of the bottom of the frame, which can be used to cover up head switching noise.

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -filter:v drawbox=

- This calls the drawbox filter with the following options:

- w=iw

- Width is set to the input width. iw is shorthand for in_w.

- h=7

- Height is set to 7 pixels.

- y=ih-h

- Y represents the offset, and ih-h sets it to the input height minus the height declared in the previous parameter, setting the box at the bottom of the frame.

- t=max

- T represents the thickness of the drawn box edge. Here max requests the largest possible thickness, so the box is drawn filled. Default is 3.

- output_file

- path and name of the output file

Generate two access MP3s from input. One with appended audio (such as copyright notice) and one unmodified.

ffmpeg -i input_file -i input_file_to_append -filter_complex "[0:a:0]asplit=2[a][b];[b]afifo[bb];[1:a:0][bb]concat=n=2:v=0:a=1[concatout]" -map "[a]" -codec:a libmp3lame -dither_method modified_e_weighted -qscale:a 2 output_file.mp3 -map "[concatout]" -codec:a libmp3lame -dither_method modified_e_weighted -qscale:a 2 output_file_appended.mp3

This script allows you to generate two derivative audio files from a master while appending audio from a separate file (for example a copyright or institutional notice) to one of them.

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file (the master file)

- -i input_file_to_append

- path, name and extension of the input file (the file to be appended to access file)

- -filter_complex

- enables the complex filtering to manage splitting the input to two audio streams

- [0:a:0]asplit=2[a][b];

- asplit allows audio streams to be split up for separate manipulation. This command splits the audio from the first input (the master file) into two streams "a" and "b"

- [b]afifo[bb];

- this buffers the stream "b" to help prevent dropped samples and renames stream to "bb"

- [1:a:0][bb]concat=n=2:v=0:a=1[concatout]

- concat is used to join files. n=2 tells the filter there are two inputs. v=0:a=1 tells the filter there are 0 video outputs and 1 audio output. This command places the audio from the second input ahead of stream "bb" and names the output "concatout"

- -map "[a]"

- this maps the unmodified audio stream to the first output

- -codec:a libmp3lame -dither_method modified_e_weighted -qscale:a 2

- sets up mp3 options (using constant quality)

- output_file

- path, name and extension of the output file (unmodified)

- -map "[concatout]"

- this maps the modified stream to the second output

- -codec:a libmp3lame -dither_method modified_e_weighted -qscale:a 2

- sets up mp3 options (using constant quality)

- output_file_appended

- path, name and extension of the output file (with appended notice)

Preservation

Create Bash script to batch process with ffmpeg

Bash scripts are plain text files saved with a .sh extension. This entry explains how they work with the example of a bash script named “Rewrap-MXF.sh”, which rewraps .MXF files in a given directory to .MOV files.

“Rewrap-MXF.sh” contains the following text:

for file in *.MXF; do ffmpeg -i "$file" -map 0 -c copy "${file%.MXF}.mov"; done

- for file in *.MXF

- starts the loop, and states what the input files will be. Here, the ffmpeg command within the loop will be applied to all files with an extension of .MXF.

The word ‘file’ is an arbitrary variable which will represent each .MXF file in turn as it is looped over.

- do ffmpeg -i "$file"

- carry out the following ffmpeg command for each input file.

Per Bash syntax, within the command the variable is referred to by “$file”. The dollar sign is used to reference the variable ‘file’, and the enclosing quotation marks prevent reinterpretation of any special characters that may occur within the filename, ensuring that the original filename is retained.

- -map 0

- retain all streams

- -c copy

- enable stream copy (no re-encode)

- "${file%.MXF}.mov";

- retaining the original file name, set the output file wrapper as .mov

- done

- complete; all items have been processed.

Note: the shell script (.sh file) and all .MXF files to be processed must be contained within the same directory, and the script must be run from that directory.

Execute the .sh file with the command sh Rewrap-MXF.sh.

Modify the script as needed to perform different transcodes, or to use with ffprobe. :)

The basic pattern will look similar to this:

for item in *.ext; do ffmpeg -i "$item" (ffmpeg options here) "${item%.ext}_suffix.ext"; done

e.g., if an input file is bestmovie002.avi, its output will be bestmovie002_suffix.avi.
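The ${item%.ext} expansion used above removes the shortest match of .ext from the end of the variable's value, and whatever follows the closing brace is then added back on. A quick way to check the resulting names is a dry run that only prints the commands. In this sketch, renamed is a hypothetical helper and the file names are made up for illustration.

```shell
# renamed FILE OLD_EXT NEW_TAIL
# Strips ".OLD_EXT" from the end of FILE and appends NEW_TAIL.
renamed() {
  printf '%s\n' "${1%.$2}$3"
}

# Dry run: print the ffmpeg commands instead of executing them.
for file in clip_a.MXF clip_b.MXF; do
  echo ffmpeg -i "$file" -map 0 -c copy "$(renamed "$file" MXF .mov)"
done

# The suffix pattern from the text above:
renamed bestmovie002.avi avi _suffix.avi
```

Once the printed commands look right, removing the echo turns the dry run into the real batch job.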

Create PowerShell script to batch process with ffmpeg

As of Windows 10, it is possible to run Bash via Bash on Ubuntu on Windows, allowing you to use bash scripting. To enable Bash on Windows, see these instructions.

On Windows, the primary native command line programme is PowerShell. PowerShell scripts are plain text files saved with a .ps1 extension. This entry explains how they work with the example of a PowerShell script named “rewrap-mp4.ps1”, which rewraps .mp4 files in a given directory to .mkv files.

“rewrap-mp4.ps1” contains the following text:

$inputfiles = ls *.mp4

foreach ($file in $inputfiles) {

$output = [io.path]::ChangeExtension($file, '.mkv')

ffmpeg -i $file -map 0 -c copy $output

}

- $inputfiles = ls *.mp4

- Creates the variable $inputfiles, which is a list of all the .mp4 files in the current folder.

In PowerShell, all variable names start with the dollar-sign character.

- foreach ($file in $inputfiles)

- Creates a loop and states the subsequent code block will be applied to each file listed in $inputfiles.

$file is an arbitrary variable which will represent each .mp4 file in turn as it is looped over.

- {

- Opens the code block.

- $output = [io.path]::ChangeExtension($file, '.mkv')

- Sets up the output file: it will be located in the current folder and keep the same filename, but will have an .mkv extension instead of .mp4.

- ffmpeg -i $file

- Carry out the following ffmpeg command for each input file.

Note: To call ffmpeg here as just ‘ffmpeg’ (rather than entering the full path to ffmpeg.exe), you must make sure that it’s correctly configured. See this article, especially the section ‘Add to Path’.

- -map 0

- retain all streams

- -c copy

- enable stream copy (no re-encode)

- $output

- The output file is set to the value of the $output variable declared above: i.e., the current file name with an .mkv extension.

- }

- Closes the code block.

Note: the PowerShell script (.ps1 file) and all .mp4 files to be rewrapped must be contained within the same directory, and the script must be run from that directory.

Execute the .ps1 file by typing .\rewrap-mp4.ps1 in PowerShell.

Modify the script as needed to perform different transcodes, or to use with ffprobe. :)

Create MD5 checksums

ffmpeg -i input_file -f framemd5 -an output_file

This will create an MD5 checksum per video frame.

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -f framemd5

- sets the output format to framemd5, which writes an MD5 checksum for each decoded frame

- -an

- ignores the audio stream (audio no)

- output_file

- path, name and extension of the output file

You may verify an MD5 checksum file created this way by using a Bash script.
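One possible shape for such a verification script is sketched below. It assumes that the header lines of a framemd5 report begin with # (they record details such as the software version, which can differ between runs without the content having changed), so only the remaining per-frame lines are compared. same_framemd5 and the file names in the usage comment are hypothetical.

```shell
# same_framemd5 REPORT_A REPORT_B
# Succeeds (exit status 0) when both reports list identical per-frame
# checksums, ignoring the "#" header lines.
same_framemd5() {
  a=$(grep -v '^#' "$1" || true)
  b=$(grep -v '^#' "$2" || true)
  [ "$a" = "$b" ]
}

# Typical use, assuming stored.framemd5 was created earlier:
#   ffmpeg -i input_file -f framemd5 -an new.framemd5
#   same_framemd5 stored.framemd5 new.framemd5 && echo "checksums match"
```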

Pull specs from video file

ffprobe -i input_file -show_format -show_streams -show_data -print_format xml

This command extracts technical metadata from a video file and displays it in xml.

ffmpeg documentation on ffprobe (full list of flags, commands, www.ffmpeg.org/ffprobe.html)

- ffprobe

- starts the command

- -i input_file

- path, name and extension of the input file

- -show_format

- outputs file container information

- -show_streams

- outputs audio and video codec information

- -show_data

- adds a short “hexdump” to show_streams command output

- -print_format

- Set the output printing format (in this example “xml”; other formats include “json” and “flat”)

Check FFV1 Version 3 fixity

ffmpeg -report -i input_file -f null -

This decodes your video and displays any CRC checksum mismatches. These errors will display in your terminal like this: [ffv1 @ 0x1b04660] CRC mismatch 350FBD8A!at 0.272000 seconds

Frame crcs are enabled by default in FFV1 Version 3.

- ffmpeg

- starts the command

- -report

- Dump full command line and console output to a file named ffmpeg-YYYYMMDD-HHMMSS.log in the current directory. It also implies -loglevel verbose.

- -i input_file

- path, name and extension of the input file

- -f null

- Video is decoded with the null muxer. This allows video decoding without creating an output file.

- -

- FFmpeg syntax requires a specified output, and - is just a placeholder. No file is actually created.

Check video file interlacement patterns

ffmpeg -i input_file -filter:v idet -f null -

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -filter:v idet

- This calls the idet (detect video interlacing type) filter.

- -f null

- Video is decoded with the null muxer. This allows video decoding without creating an output file.

- -

- FFmpeg syntax requires a specified output, and - is just a placeholder. No file is actually created.

Creates a QCTools report

ffprobe -f lavfi -i "movie=input_file:s=v+a[in0][in1],[in0]signalstats=stat=tout+vrep+brng,cropdetect=reset=1:round=1,idet=half_life=1,split[a][b];[a]field=top[a1];[b]field=bottom,split[b1][b2];[a1][b1]psnr[c1];[c1][b2]ssim[out0];[in1]ebur128=metadata=1,astats=metadata=1:reset=1:length=0.4[out1]" -show_frames -show_versions -of xml=x=1:q=1 -noprivate | gzip > input_file.qctools.xml.gz

This will create an XML report for use in QCTools for a video file with one video track and one audio track. See also the QCTools documentation.

- ffprobe

- starts the command

- -f lavfi

- tells ffprobe to use the Libavfilter input virtual device [more]

- -i

- input file and parameters

- "movie=input_file:s=v+a[in0][in1],[in0]signalstats=stat=tout+vrep+brng,cropdetect=reset=1:round=1,idet=half_life=1,split[a][b];[a]field=top[a1];[b]field=bottom,split[b1][b2];[a1][b1]psnr[c1];[c1][b2]ssim[out0];[in1]ebur128=metadata=1,astats=metadata=1:reset=1:length=0.4[out1]"

- This very large lump of commands declares the input file and passes in a request for all potential data signal information for a file with one video and one audio track

- -show_frames

- asks for information about each frame and subtitle contained in the input multimedia stream

- -show_versions

- asks for information related to program and library versions

- -of xml=x=1:q=1

- sets the data export format to XML

- -noprivate

- hides any private data that might exist in the file

- | gzip

- The | is to "pipe" (or push) the data into a compressed file format

- >

- redirects the standard output (the data made by ffprobe about the video)

- input_file.qctools.xml.gz

- names the zipped data output file, which can be named anything but needs the extension qctools.xml.gz for compatibility issues

Creates a QCTools report (no audio)

ffprobe -f lavfi -i "movie=input_file,signalstats=stat=tout+vrep+brng,cropdetect=reset=1:round=1,idet=half_life=1,split[a][b];[a]field=top[a1];[b]field=bottom,split[b1][b2];[a1][b1]psnr[c1];[c1][b2]ssim" -show_frames -show_versions -of xml=x=1:q=1 -noprivate | gzip > input_file.qctools.xml.gz

This will create an XML report for use in QCTools for a video file with one video track and NO audio track. See also the QCTools documentation.

- ffprobe

- starts the command

- -f lavfi

- tells ffprobe to use the Libavfilter input virtual device [more]

- -i

- input file and parameters

- "movie=input_file,signalstats=stat=tout+vrep+brng,cropdetect=reset=1:round=1,idet=half_life=1,split[a][b];[a]field=top[a1];[b]field=bottom,split[b1][b2];[a1][b1]psnr[c1];[c1][b2]ssim"

- This very large lump of commands declares the input file and passes in a request for all potential data signal information for a file with one video track and no audio track

- -show_frames

- asks for information about each frame and subtitle contained in the input multimedia stream

- -show_versions

- asks for information related to program and library versions

- -of xml=x=1:q=1

- sets the data export format to XML

- -noprivate

- hides any private data that might exist in the file

- | gzip

- The | is to "pipe" (or push) the data into a compressed file format

- >

- redirects the standard output (the data made by ffprobe about the video)

- input_file.qctools.xml.gz

- names the zipped data output file, which can be named anything but needs the extension qctools.xml.gz for compatibility issues

Test files

Makes a mandelbrot test pattern video

ffmpeg -f lavfi -i mandelbrot=size=1280x720:rate=25 -c:v libx264 -t 10 output_file

- ffmpeg

- starts the command

- -f lavfi

- tells ffmpeg to use the Libavfilter input virtual device [more]

- -i mandelbrot=size=1280x720:rate=25

- asks for the mandelbrot test filter as input. Adjusting the size and rate options allows you to choose a specific frame size and framerate. [more]

- -c:v libx264

- transcodes video from rawvideo to H.264. Set -pix_fmt to yuv420p for greater H.264 compatibility with media players.

- -t 10

- specifies recording time of 10 seconds

- output_file

- path, name and extension of the output file. Try different file extensions such as mkv, mov, mp4, or avi.

Makes a SMPTE bars test pattern video

ffmpeg -f lavfi -i smptebars=size=720x576:rate=25 -c:v prores -t 10 output_file

- ffmpeg

- starts the command

- -f lavfi

- tells ffmpeg to use the Libavfilter input virtual device [more]

- -i smptebars=size=720x576:rate=25

- asks for the smptebars test filter as input. Adjusting the size and rate options allows you to choose a specific frame size and framerate. [more]

- -c:v prores

- transcodes video from rawvideo to Apple ProRes 4:2:2.

- -t 10

- specifies recording time of 10 seconds

- output_file

- path, name and extension of the output file. Try different file extensions such as mov or avi.

Make a test pattern video

ffmpeg -f lavfi -i testsrc=size=720x576:rate=25 -c:v v210 -t 10 output_file

- ffmpeg

- starts the command

- -f lavfi

- tells ffmpeg to use the libavfilter input virtual device

- -i testsrc=size=720x576:rate=25

- asks for the testsrc filter pattern as input. Adjusting the size and rate options allows you to choose a specific frame size and framerate.

The different test patterns that can be generated are listed here.

- -c:v v210

- transcodes video from rawvideo to 10-bit Uncompressed YUV 4:2:2. Alter this setting to set your desired codec.

- -t 10

- specifies recording time of 10 seconds

- output_file

- path, name and extension of the output file. Try different file extensions such as mkv, mov, mp4, or avi.

Play HD SMPTE bars

Test an HD video projector by playing the SMPTE colour bars pattern.

ffplay -f lavfi -i smptehdbars=size=1920x1080

Play VGA SMPTE bars

Test a VGA (SD) video projector by playing the SMPTE colour bars pattern.

ffplay -f lavfi -i smptebars=size=640x480

- ffplay

- starts the command

- -f lavfi

- tells ffplay to use the libavfilter input virtual device [more]

- -i smptebars=size=640x480

- asks for the smptebars filter pattern as input and sets the VGA (SD) resolution. This generates a colour bars pattern, based on the SMPTE Engineering Guideline EG 1–1990. [more]

Sine wave

Generate a test audio file playing a sine wave.

ffmpeg -f lavfi -i "sine=frequency=1000:sample_rate=48000:duration=5" -c:a pcm_s16le output_file.wav

- ffmpeg

- starts the command

- -f lavfi

- tells ffmpeg to use the libavfilter input virtual device [more]

- -i "sine=frequency=1000:sample_rate=48000:duration=5"

- Sets the signal to 1000 Hz, sampling at 48 kHz, and for 5 seconds

- -c:a pcm_s16le

- encodes the audio as pcm_s16le (the default encoding for wav files). pcm represents pulse-code modulation format (raw bytes), 16 means 16 bits per sample, and le means "little endian"

- output_file.wav

- path, name and extension of the output file
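As a rough sanity check on the result, the amount of raw audio data follows directly from the options above: sample rate × bytes per sample × channels × duration. The sketch below assumes a single (mono) channel, and pcm_bytes is a hypothetical helper. The WAV header adds a small amount on top of this figure, so the file on disk will be slightly larger.

```shell
# pcm_bytes SAMPLE_RATE BITS_PER_SAMPLE CHANNELS SECONDS
# Prints the size in bytes of the uncompressed PCM audio data.
pcm_bytes() {
  echo $(( $1 * ($2 / 8) * $3 * $4 ))
}

# 48 kHz, 16 bits per sample, 1 channel (assumed), 5 seconds
pcm_bytes 48000 16 1 5   # prints 480000
```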

Other

Join files together

ffmpeg -f concat -i mylist.txt -c copy output_file

This command takes two or more files of the same file type and joins them together to make a single file. All that the program needs is a text file with a list specifying the files that should be joined. However, it only works properly if the files to be combined have the exact same codec and technical specifications. Be careful, ffmpeg may appear to have successfully joined two video files with different codecs, but may only bring over the audio from the second file or have other weird behaviors. Don’t use this command for joining files with different codecs and technical specs and always preview your resulting video file!

- ffmpeg

- starts the command

- -f concat

- forces ffmpeg to concatenate the files and to keep the same file format

- -i mylist.txt

- path, name and extension of the input file. Per the ffmpeg documentation, it is preferable to specify relative rather than absolute file paths, as allowing absolute file paths may pose a security risk.

This text file contains the list of files to be concatenated and should be formatted as follows:

file './first_file.ext'
file './second_file.ext'
. . .
file './last_file.ext'

In the above, file is simply the word "file". Straight apostrophes ('like this') rather than curved quotation marks (‘like this’) must be used to enclose the file paths.

Note: If specifying absolute file paths in the .txt file, add -safe 0 before the input file.

e.g.: ffmpeg -f concat -safe 0 -i mylist.txt -c copy output_file

- -c copy

- use stream copy mode to re-mux instead of re-encode

- output_file

- path, name and extension of the output file

For more information, see the ffmpeg wiki page on concatenating files.
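For folders with many files, the list can be generated rather than typed by hand. This is a minimal sketch under a few assumptions: the files to join sit in the current directory, alphabetical order is the desired playback order, and no file name contains an apostrophe. make_concat_list is a hypothetical helper, not an ffmpeg feature.

```shell
# make_concat_list EXT
# Prints one "file './name.EXT'" line per matching file in the current
# directory, in the shell's (alphabetical) glob order.
make_concat_list() {
  for f in ./*."$1"; do
    [ -e "$f" ] || continue   # nothing matched: skip the literal pattern
    printf "file '%s'\n" "$f"
  done
}

# Typical use:
#   make_concat_list mkv > mylist.txt
#   ffmpeg -f concat -i mylist.txt -c copy output_file
```

It is worth opening mylist.txt to confirm the order before running the join, since names such as clip10 sort before clip2.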

Play an image sequence

Play an image sequence directly as moving images, without having to create a video first.

ffplay -framerate 5 input_file_%06d.ext

- ffplay

- starts the command

- -framerate 5

- plays image sequence at rate of 5 images per second

Note: this low framerate will produce a slideshow effect.

- -i input_file

- path, name and extension of the input file

This must match the naming convention used! The pattern %06d matches six-digit-long numbers, possibly with leading zeroes. This allows the full sequence to be read in ascending order, one image after the other.

The extension for TIFF files is .tif or maybe .tiff; the extension for DPX files is .dpx (or even .cin for old files). Screenshots are often in .png format.

Notes:

If -framerate is omitted, the playback speed depends on the images’ file sizes and on the computer’s processing power. It may be rather slow for large image files.

You can navigate durationally by clicking within the playback window. Clicking towards the left-hand side of the playback window takes you towards the beginning of the playback sequence; clicking towards the right takes you towards the end of the sequence.

Split audio and video tracks

ffmpeg -i input_file -map 0:v:0 video_output_file -map 0:a:0 audio_output_file

This command splits the original input file into a video and audio stream. The -map command identifies which streams are mapped to which file. To ensure that you’re mapping the right streams to the right file, run ffprobe before writing the script to identify which streams are desired.

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -map 0:v:0

- grabs the first video stream and maps it into:

- video_output_file

- path, name and extension of the video output file

- -map 0:a:0

- grabs the first audio stream and maps it into:

- audio_output_file

- path, name and extension of the audio output file

Combine audio tracks into one in a video file

ffmpeg -i input_file -filter_complex "[0:a:0][0:a:1]amerge[out]" -map 0:v -map "[out]" -c:v copy -shortest output_file

This command combines two audio tracks present in a video file into one stream. It can be useful in situations where a downstream process, like YouTube’s automatic captioning, expects one audio track. To ensure that you’re mapping the right audio tracks, run ffprobe before writing the script to identify which tracks are desired. More than two audio streams can be combined by extending the pattern present in the -filter_complex option.

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -filter_complex [0:a:0][0:a:1]amerge[out]

- combines the two audio tracks into one

- -map 0:v

- map the video

- -map "[out]"

- map the combined audio defined by the filter

- -c:v copy

- copy the video

- -shortest

- limit to the shortest stream

- output_file

- path, name and extension of the video output file

Extract audio from an AV file

ffmpeg -i input_file -c:a copy -vn output_file

This command extracts the audio stream without loss from an audiovisual file.

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -c:a copy

- copies the audio stream as-is, without re-encoding

- -vn

- no video stream

- output_file

- path, name and extension of the output file

Flip the video image horizontally and/or vertically

ffmpeg -i input_file -filter:v "hflip,vflip" -c:a copy output_file

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -filter:v "hflip,vflip"

- flips the image horizontally and vertically

By using only one of the parameters hflip or vflip for filtering, the image is flipped on that axis only. The quote marks are not mandatory.

- -c:a copy

- copies the audio stream as-is, without re-encoding

For silent videos you can replace -c:a copy with -an.

- output_file

- path, name and extension of the output file

Modify image and sound speed

E.g. for converting 24fps to 25fps with audio pitch compensation for PAL access copies. (Thanks @kieranjol!)

ffmpeg -i input_file -filter_complex "[0:v]setpts=input_fps/output_fps*PTS[v]; [0:a]atempo=output_fps/input_fps[a]" -map "[v]" -map "[a]" output_file

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -filter_complex "[0:v]setpts=input_fps/output_fps*PTS[v]; [0:a]atempo=output_fps/input_fps[a]"

- A complex filter is needed here, in order to handle the video stream and the audio stream separately. The setpts video filter modifies the PTS (presentation time stamp) of the video stream, and the atempo audio filter modifies the speed of the audio stream while keeping the same sound pitch. Note that the order of the parameters for the image and for the sound is inverted:

- In the video filter setpts the numerator input_fps sets the input speed and the denominator output_fps sets the output speed; both values are given in frames per second.

- In the sound filter atempo the numerator output_fps sets the output speed and the denominator input_fps sets the input speed; both values are given in frames per second.

- -map "[v]"

- maps the video stream and:

- -map "[a]"

- maps the audio stream together into:

- output_file

- path, name and extension of the output file
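Because the two ratios are inverted, it is easy to swap them by accident. The sketch below fills in the placeholders for a given pair of frame rates and prints the resulting filtergraph string. speed_filtergraph is a hypothetical helper for illustration only.

```shell
# speed_filtergraph INPUT_FPS OUTPUT_FPS
# Prints the -filter_complex string with both ratios filled in:
# input/output for setpts, output/input for atempo.
speed_filtergraph() {
  printf '[0:v]setpts=%s/%s*PTS[v]; [0:a]atempo=%s/%s[a]\n' "$1" "$2" "$2" "$1"
}

# 24 fps to 25 fps, as in the PAL example above
speed_filtergraph 24 25
```

For 24 to 25 fps this prints [0:v]setpts=24/25*PTS[v]; [0:a]atempo=25/24[a], meaning the video timestamps are scaled by 0.96 while the audio tempo is raised by roughly 4%.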

Create centered, transparent text watermark

E.g. for creating access copies with your institution’s name

ffmpeg -i input_file -vf drawtext="fontfile=font_path:fontsize=font_size:text=watermark_text:fontcolor=font_colour:alpha=0.4:x=(w-text_w)/2:y=(h-text_h)/2" output_file

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -vf drawtext=

- This calls the drawtext filter with the following options:

- fontfile=font_path

- Set path to font. For example in macOS: fontfile=/Library/Fonts/AppleGothic.ttf

- fontsize=font_size

- Set font size. 35 is a good starting point for SD. Ideally this value is proportional to video size, for example use ffprobe to acquire video height and divide by 14.

- text=watermark_text

- Set the content of your watermark text. For example: text='FFMPROVISR EXAMPLE TEXT'

- fontcolor=font_colour

- Set colour of font. Can be a text string such as fontcolor=white or a hexadecimal value such as fontcolor=0xFFFFFF

- alpha=0.4

- Set transparency value.

- x=(w-text_w)/2:y=(h-text_h)/2

- Sets x and y coordinates for the watermark. These relative values will centre your watermark regardless of video dimensions.

-vfis a shortcut for-filter:v. - output_file

- path, name and extension of the output file.
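The "height divided by 14" rule of thumb for the font size can be sketched in shell as follows. The function name fontsize_for_height is an assumption made for this example; the ffprobe call is left as a comment so that the sketch also runs without an input file.

```shell
# Hypothetical helper: derive a drawtext font size from the frame height,
# using the "height divided by 14" rule of thumb described above.
fontsize_for_height() {
    # Integer division; the result is rounded down.
    echo $(( $1 / 14 ))
}

fontsize_for_height 480    # an SD frame height
fontsize_for_height 1080   # an HD frame height

# With ffprobe installed, the height could be read from the file itself:
# height=$(ffprobe -v error -select_streams v:0 -show_entries stream=height -of csv=p=0 input_file)
# fontsize_for_height "$height"
```

The resulting number can then be used as the font_size value in the command above.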

Overlay image watermark on video

ffmpeg -i input_video_file -i input_image_file -filter_complex overlay=main_w-overlay_w-5:5 output_file

- ffmpeg

- starts the command

- -i input_video_file

- path, name and extension of the input video file

- -i input_image_file

- path, name and extension of the image file

- -filter_complex overlay=main_w-overlay_w-5:5

- This calls the overlay filter and sets x and y coordinates for the position of the watermark on the video. Instead of hardcoding specific x and y coordinates, main_w-overlay_w-5:5 uses relative coordinates to place the watermark in the upper right hand corner, based on the width of your input files. Please see the ffmpeg documentation for more examples.

- output_file

- path, name and extension of the output file
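The same relative coordinates can be adapted to the other corners. The sketch below assumes a made-up helper called overlay_position and a default margin of 5 pixels; main_h and overlay_h are the height counterparts of main_w and overlay_w in the overlay filter.

```shell
# Hypothetical helper: returns an overlay position expression for a corner.
# main_w/main_h are the video's dimensions, overlay_w/overlay_h the image's.
overlay_position() {
    corner=$1
    margin=${2:-5}   # margin in pixels, 5 if not given
    case "$corner" in
        top-left)     echo "${margin}:${margin}" ;;
        top-right)    echo "main_w-overlay_w-${margin}:${margin}" ;;
        bottom-left)  echo "${margin}:main_h-overlay_h-${margin}" ;;
        bottom-right) echo "main_w-overlay_w-${margin}:main_h-overlay_h-${margin}" ;;
        *) echo "unknown corner: $corner" >&2; return 1 ;;
    esac
}

# Print a command that places the watermark in the lower right hand corner.
echo "ffmpeg -i input_video_file -i input_image_file -filter_complex overlay=$(overlay_position bottom-right) output_file"
```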

Create a burnt in timecode on your image

ffmpeg -i input_file -vf drawtext="fontfile=font_path:fontsize=font_size:timecode=starting_timecode:fontcolor=font_colour:box=1:boxcolor=box_colour:rate=timecode_rate:x=(w-text_w)/2:y=h/1.2" output_file

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -vf drawtext=

- This calls the drawtext filter with the following options:

- fontfile=font_path

- Set path to font. For example in macOS: fontfile=/Library/Fonts/AppleGothic.ttf

- fontsize=font_size

- Set font size. 35 is a good starting point for SD. Ideally this value is proportional to video size, for example use ffprobe to acquire video height and divide by 14.

- timecode=starting_timecode

- Set the timecode to be displayed for the first frame. Timecode is to be represented as hh:mm:ss[:;.]ff. Colon escaping is determined by the operating system, for example in Ubuntu: timecode='09\\:50\\:01\\:23'. Ideally, this value would be generated from the file itself using ffprobe.

- fontcolor=font_colour

- Set colour of font. Can be a text string such as fontcolor=white or a hexadecimal value such as fontcolor=0xFFFFFF

- box=1

- Enable box around timecode

- boxcolor=box_colour

- Set colour of box. Can be a text string such as boxcolor=black or a hexadecimal value such as boxcolor=0x000000

- rate=timecode_rate

- Framerate of video. For example 25/1

- x=(w-text_w)/2:y=h/1.2

- Sets x and y coordinates for the timecode. These relative values will horizontally centre your timecode in the bottom third regardless of video dimensions.

-vf is a shortcut for -filter:v.

- output_file

- path, name and extension of the output file.

Transcode an image sequence into uncompressed 10-bit video

ffmpeg -f image2 -framerate 24 -i input_file_%06d.ext -c:v v210 -an output_file

- ffmpeg

- starts the command

- -f image2

- forces the image file de-muxer for single image files

- -framerate 24

- Sets the input framerate to 24 fps. The image2 demuxer defaults to 25 fps.

- -i input_file_%06d.ext

- path, name and extension of the input files

This must match the naming convention actually used! The pattern %06d matches six-digit numbers, possibly with leading zeroes. This allows the full sequence inside one folder to be read in ascending order, one image after the other. For image sequences starting with 086400 (i.e. captured with a timecode starting at 01:00:00:00 and at 24 fps), add the flag -start_number 086400 before -i input_file_%06d.ext. The extension for TIFF files is .tif or maybe .tiff; the extension for DPX files is .dpx (or possibly .cin for old files).

- -c:v v210

- encodes an uncompressed 10-bit video stream

- -an

- no audio

- output_file

- path, name and extension of the output file
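The start number 086400 mentioned above follows from the timecode arithmetic: one hour is 3600 seconds, and 3600 seconds at 24 fps is 86400 frames. A minimal shell sketch of that conversion is given below; the function name timecode_to_frames is made up for this example, and it assumes non-drop-frame timecode with two-digit fields.

```shell
# Hypothetical helper: convert a non-drop-frame timecode (hh:mm:ss:ff)
# into a six-digit frame count, which can be passed to -start_number.
timecode_to_frames() {
    fps=$2
    # Split the timecode on colons into the positional parameters.
    old_ifs=$IFS
    IFS=:
    set -- $1
    IFS=$old_ifs
    # Strip one leading zero so that values like 08 are not read as octal.
    h=${1#0}; m=${2#0}; s=${3#0}; f=${4#0}
    # Pad to six digits to match the %06d naming pattern.
    printf '%06d\n' "$(( ((h * 60 + m) * 60 + s) * fps + f ))"
}

timecode_to_frames 01:00:00:00 24
```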

Create a video from an image and audio file.

ffmpeg -r 1 -loop 1 -i image_file -i audio_file -acodec copy -shortest -vf scale=1280:720 output_file

This command will take an image file (e.g. image.jpg) and an audio file (e.g. audio.mp3) and combine them into a video file that contains the audio track with the image used as the video. It can be useful in a situation where you might want to upload an audio file to a platform like YouTube. You may want to adjust the scaling with -vf to suit your needs.

- ffmpeg

- starts the command

- -r 1

- set the framerate

- -loop 1

- loop the first input stream

- -i image_file

- path, name and extension of the image file

- -i audio_file

- path, name and extension of the audio file

- -acodec copy

- copy the audio. -acodec is an alias for -c:a

- -shortest

- finish encoding when the shortest input stream ends

- -vf scale=1280:720

- filter the video to scale it to 1280x720 for YouTube. -vf is an alias for -filter:v

- output_file

- path, name and extension of the video output file

Change field order of an interlaced video

ffmpeg -i input_file -c:v prores -filter:v setfield=tff output_file

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -filter:v setfield=tff

- Sets the field order to top field first. Use setfield=bff for bottom field first.

- -c:v prores

- Tells ffmpeg to transcode the video stream into Apple ProRes 422. Experiment with using other codecs.

- output_file

- path, name and extension of the output file

Set stream properties

Find undetermined or unknown stream properties

These examples use QuickTime inputs and outputs. The strategy will vary or may not be possible in other file formats. In the case of these examples it is the intention to make a lossless copy while clarifying an unknown characteristic of the stream.

ffprobe input_file -show_streams

- ffprobe

- starts the command

- input_file

- path, name and extension of the input file

- -show_streams

- Shows metadata of stream properties

Values that are set to 'unknown' and 'undetermined' may be unspecified within the stream. An unknown aspect ratio would be expressed as '0:1'. Streams with many unknown properties may have interoperability issues or not play as intended. In many cases, an unknown or undetermined value may be accurate because the information about the source is unclear, but often the value is intended to be known. In many cases the stream will be played with an assumed value if undetermined (for instance a display_aspect_ratio of '0:1' may be played as 'WIDTH:HEIGHT'), but this may or may not be what is intended. Use carefully.
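To spot such values quickly, the key=value lines printed by ffprobe can be filtered. The following is a minimal sketch: the function name find_unknown_properties is an assumption, and the sample lines are illustrative only, not taken from a real file.

```shell
# Hypothetical helper: reads "key=value" lines, as printed by
# ffprobe -show_streams, and keeps those whose value looks unset.
find_unknown_properties() {
    grep -E '=(unknown|undetermined|0:1)$'
}

# Illustrative sample only; it is not taken from a real file.
find_unknown_properties <<'EOF'
codec_name=prores
width=720
height=486
display_aspect_ratio=0:1
field_order=unknown
color_primaries=unknown
EOF

# With ffprobe installed, a real file could be checked like this:
# ffprobe -v error -show_streams input_file | find_unknown_properties
```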

Set aspect ratio

If the display_aspect_ratio is set to '0:1' it may be clarified with the -aspect option and stream copy.

ffmpeg -i input_file -c copy -map 0 -aspect DAR_NUM:DAR_DEN output_file

- ffmpeg

- starts the command

- -i input_file

- path, name and extension of the input file

- -c copy

- Uses stream copy for all streams

- -map 0

- Tells ffmpeg to map all streams of the input to the output.

- -aspect DAR_NUM:DAR_DEN

- Replace DAR_NUM with the display aspect ratio numerator and DAR_DEN with the display aspect ratio denominator, such as -aspect 4:3 or -aspect 16:9.

- output_file

- path, name and extension of the output file
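If the intended display aspect ratio is not known offhand but the pixel (sample) aspect ratio is, the value for -aspect can be derived from the frame size: DAR = (width × SAR numerator) : (height × SAR denominator), reduced to lowest terms. The sketch below assumes two made-up helper names, gcd and display_aspect_ratio, and example frame sizes chosen for illustration.

```shell
# Hypothetical helper: greatest common divisor (Euclid's algorithm).
gcd() {
    a=$1; b=$2
    while [ "$b" -ne 0 ]; do
        t=$(( a % b ))
        a=$b
        b=$t
    done
    echo "$a"
}

# Hypothetical helper: display aspect ratio from the frame size and the
# sample (pixel) aspect ratio.
# Arguments: width height sar_num sar_den
display_aspect_ratio() {
    num=$(( $1 * $3 ))
    den=$(( $2 * $4 ))
    g=$(gcd "$num" "$den")
    echo "$(( num / g )):$(( den / g ))"
}

# A 720x576 frame with 16:15 pixels
display_aspect_ratio 720 576 16 15
```

The printed ratio can be used directly as DAR_NUM:DAR_DEN in the command above.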

Adding other stream properties.

Other properties may be clarified in a similar way. Replace -aspect and its value with other properties such as shown in the options below. Note that setting color values in QuickTime requires that -movflags write_colr is set.

- -color_primaries VALUE -movflags write_colr

- Set a new color_primaries value. The vocabulary for values is at ffmpeg.

- -color_trc VALUE -movflags write_colr

- Set a new color_transfer value. The vocabulary for values is at ffmpeg.

- -field_order VALUE

- Set interlacement values. The vocabulary for values is at ffmpeg.

View information about a specific format

ffmpeg -h type=name

- ffmpeg

- starts the command

- -h

- Call the help option

- type=name

- Tells ffmpeg which kind of option you want, for example:

- encoder=libx264

- decoder=mp3

- muxer=matroska

- demuxer=mov

- filter=crop

Made with ♥ at AMIA #AVhack15! Contribute to the project via our GitHub page!